As artificial intelligence increasingly powers search engines, a threat known as “Black Hat SEO poisoning” is emerging. Attackers deliberately manipulate search results to promote malicious content and degrade the accuracy of AI-driven information. The trend poses significant risks to users and to the integrity of online information.

Traditionally, Black Hat SEO relies on unethical tactics such as keyword stuffing, cloaking, and link schemes to trick conventional search algorithms into ranking low-quality or irrelevant websites higher. With the arrival of sophisticated AI models in search, however, malicious actors are adapting these techniques to target the learning mechanisms of the AI systems themselves.

Recent reports indicate a surge in campaigns in which threat actors use Black Hat SEO to push malware-laden sites into the results for AI-related search queries. Users searching for legitimate AI tools or information, such as “Luma AI blog,” are being directed to deceptive websites that rank highly through these illicit means.

Once a user clicks through, these sites often chain multiple layers of redirection and obfuscated JavaScript to deliver malware payloads, exposing unsuspecting users to data theft or system compromise.

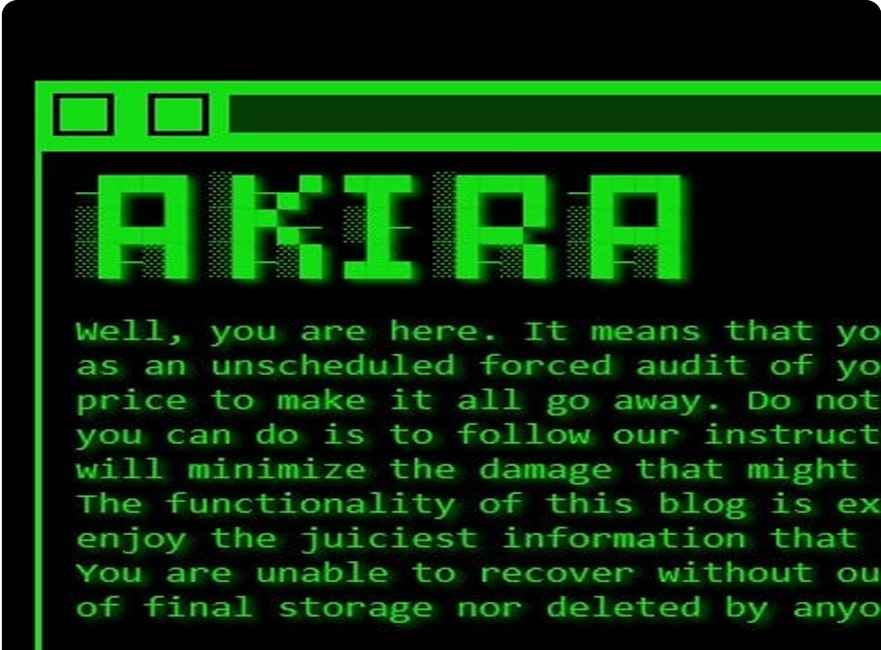

A deeper danger lies in the ability of Black Hat SEO to “poison” the data that AI models learn from. By producing vast amounts of manipulated content, often using AI itself to generate text that appears legitimate but is ultimately misleading, these actors can distort the AI’s understanding of what constitutes reliable and relevant information.

This could lead to a future where AI search results are riddled with spam, misinformation, or even harmful content, eroding user trust and making it harder to find accurate answers.

Search engine providers are constantly battling these evolving threats, using their own AI capabilities to detect and penalize Black Hat tactics. The cat-and-mouse game continues, however, as malicious actors keep finding new loopholes.

For users, vigilance remains key. Exercising caution before clicking unfamiliar links, especially for popular or trending AI topics, and keeping robust cybersecurity measures in place are crucial steps for navigating this increasingly poisoned digital landscape. The fight to maintain the integrity of AI-powered search is ongoing, and it demands continuous effort from developers and users alike.
