Congressional leaders are ramping up efforts to acquire Artificial Intelligence (AI) as federal agencies and industry push for fast, safe implementation of AI technologies throughout government bureaucracies.

A hearing of the House Homeland Security cyber subcommittee last month demonstrated the vital need to build strong cybersecurity practices into AI development to support trust and national security.

Leaders underscored the rapid development of AI, including emerging “agentic AI” capabilities, which requires clear criteria for decision-making authority and security.

While companies urged federal agencies to use AI to improve their cybersecurity, the discussion highlighted the tricky balance between fighting threats with advanced technology and reducing exposure to risk.

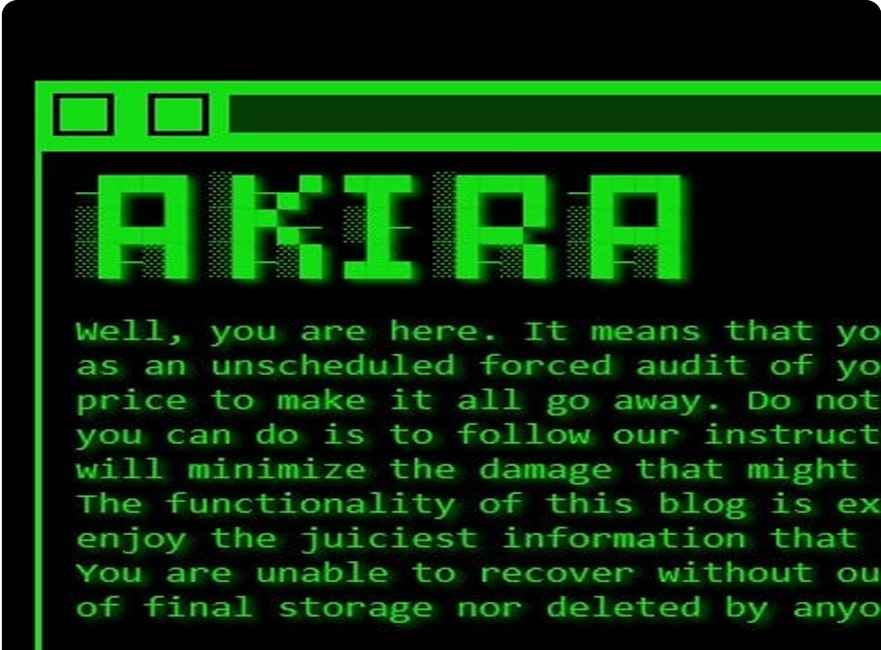

Adding to an already dynamic policy environment, a new policy directive released in June 2025 by the Trump Administration has redirected some of the attention of the federal cybersecurity community.

This directive explicitly tells agencies to integrate vulnerabilities and compromises associated with AI software into existing vulnerability management processes and to ensure that relevant data sets are available to support cyberdefense research.

While it softened some earlier-announced requirements for secure software development attestations, it continues to prioritize several other initiatives, including NIST’s ongoing work with industry on updating its Secure Software Development Framework (SSDF) and the FCC’s cybersecurity labeling program for IoT devices.

Additionally, in May 2025 CISA published extensive guidance for AI system operators, including best practices for addressing data security risk at all stages of the AI lifecycle, such as ensuring the integrity of the data supply chain and protecting against malicious modification.

On Capitol Hill, there is bipartisan support for the Advanced AI Security Readiness Act, introduced in the House. The bill’s purpose is to instruct the NSA to produce an “AI Security Playbook” that will help defend US-based AI systems against threats from foreign actors.

A contentious proposal from the House Energy & Commerce Committee, on the other hand, would impose a decade-long ban on AI regulation at the state level. Its backers say it would ease IT modernization efforts at the federal level by preventing a patchwork of conflicting state laws.

The collective push reflects an increasingly popular view that while AI promises to transform the way government does business and to improve federal cybersecurity, responsible use must remain the priority. Problems persist in closing the AI skills gap at the state and local level, as well as in creating uniform regulatory structures.

The back and forth underscores the urgent need for cohesive national standards and risk-mitigation efforts that can help ensure AI’s potential is leveraged in a safe, effective way at all levels of government.
